Cite as: Stefanescu, D., 2020. Alternate Means of Digital Design Communication (PhD Thesis). UCL, London.

2.1 Introduction

Design is a broad term. It is simultaneously a detailed process that involves a wide array of disciplines and, compounding the problem, affects an even larger number of actors that do not necessarily fit within the categorisation of a specialist profession. The given research topic, digital design communication, adds another dimension to the scope of the investigation, namely technology, which both shapes and is shaped by the purposes to which it is applied. All of these parts are well-established fields of research in their own right and this thesis will not delve into them exhaustively; the aim of this chapter is to cross-reference key concepts, ideas and practices from the fields of sociology, communication, design, and computer science in order to describe a relevant context for this investigation. The value of communication lies in the connections it enables between participants; similarly, the value of this chapter lies in the transversal linkages it makes between communication, digital technologies, and the design industries.

The following review is structured in three main parts, namely Differentiation and Divergence (Section 2.2), Design and Convergence (Section 2.3), and Design and Communication (Section 2.4). Section 2.2 aims to explain the process of modern societal differentiation and to introduce the resulting ontological divergence of disciplines (and corresponding stakeholders) and its implications for communication. Subsequently, Section 2.3 reviews scholarly work that, in a design context, investigates the complexity of multi-stakeholder interaction and the way shared understanding emerges through iterative ontological displacement. This is followed by an analysis of existing communication models in Section 2.4. Finally, Section 2.5 looks at existing work in digital design communication, from early work done in the 1970s to current standards emerging from practice and from industry groups, and links this research to previous applications in collaborative design software and to current gaps in knowledge.

Communication is an essential activity that permeates the design process in all its aspects, from ideation to materialisation. Contrary to popular representations, design is not just an isolated act of creation, or a manifestation of genius on behalf of one single author, but an inherently collaborative process in which a great number of people, with vested interests and from various disciplines, participate. These people, the design stakeholders, both define and resolve design problems through their interaction.

For example, a small single-family home project will involve as design stakeholders a client, an architect, a construction company and the local planning authority. Nevertheless, even this simple scenario, which at first sight involves just four stakeholders, has hidden interactions that are not normally associated with the design process, yet have a huge influence on it: the client’s interactions with his bank, which finances the whole endeavour and decides the overall project time, the planning authority’s regulations regarding specific usage of local materials and their impact on the budget and on the choice of construction company, etc. For larger projects, such as an apartment block, office building or train station, the number of stakeholders involved and of their interactions grows as more specialised disciplines are needed to provide expertise: structural and mechanical engineers, environmental analysts, specialised modelling consultancies, infrastructure planners, financial consultancy companies that orchestrate budgets, contractors and manufacturing companies, etc. Not only does the technical group of stakeholders grow, but so does the non-technical one: communities, business and political actors have the right to be informed about, and to a certain extent have a say in, the development of a project that affects their surroundings and livelihoods.

All the actors mentioned above exchange information and communicate. Their actions shape the built environment, piece by piece and project by project. This high degree of technical specialisation and social complexity allows ever greater and more complicated problems to be tackled, yet it does not come without friction, conflict and errors, which may ultimately lead to unsatisfactory design problem resolutions. In order to understand these issues and how they reflect and influence communication in the design process, it is necessary to look into the mechanisms behind the functional specialisation and differentiation of modern society: where do these stakeholders come from, and how do they interact?

2.2 Differentiation and Divergence

2.2.1 Industrial and Scientific Specialisation

Functional differentiation is a process of progressive diversification of societal systems that explains the transition from primitive hunter-gatherer societies to agricultural ones, and subsequently to modern society. The division of labour, as an analogous process, is a well-established concept with a long history of intellectual debate as to its positive and negative aspects. At its core lies the simple notion that individuals will perform better at a given task, with greater efficiency and higher quality output, if able to specialise in a specific aspect of the problem (Durkheim, 1997; Kant, 2002; Smith, 2017). In the words of Kant,

“All trades, handicrafts, and arts have gained through the division of labour, since, namely, one person does not do everything, but rather each limits himself to a certain labour which distinguishes itself markedly from others by its manner of treatment, in order to be able to perform it in the greatest perfection and with more facility. Where work is not differentiated, where everyone is a jack of all trades, the crafts remain at an utterly primitive level.” (Kant, 2002).

Within the contemporary domain of design and engineering, this industrially driven differentiation is readily apparent in the construction stage of a project: usually led by a general contractor, the building process is split and delegated to a network of sub-contractors, sub-sub-contractors, and manufacturers whose responsibility is limited to their area of expertise (e.g., foundation excavation, rebar placement, window mounting, electrical systems, etc.). Let’s take the example of a suspension bridge. The mathematical and physical knowledge (calculus, load distribution, cable tensions, vibration analysis, etc.), coupled with the manufacturing expertise needed to produce the necessary building components to the correct physical specification (steel cables, towers, anchorage, etc.) and the operational science of constructing such an engineering project, are beyond the powers of one single individual: each aspect involves its own domain and requires years of study and practice to master.

Industrial specialisation and the division of labour explain only one part of the diversity of stakeholders in the design process. The suspension bridge example hints at a different dimension of the process of differentiation, namely one that operates at the level of scientific knowledge. Thomas Kuhn studied this phenomenon in depth in his book The Structure of Scientific Revolutions (Kuhn, 1962). He describes the usual development pattern of modern science as one of successive transitions from one paradigm to another via scientific revolutions. Consequently, he associates this cyclical pattern of disruption with all intellectual advances thereafter, including Newton. He argues that previously, from antiquity up to the 17th century, such a dynamic did not exist: schools of thought coexisted and derived strength from their association with a particular set of phenomena that their own theory could explain better than the others. Most importantly, scholars would take no belief for granted and would rebuild their field anew from its foundations: a pattern, Kuhn argues, that is incompatible with significant discovery and invention because scientific knowledge does not accrete. In the context of the previously mentioned suspension bridge, it would be akin to rediscovering from scratch, every time a suspension bridge is built, the basic mathematical principles of solid mechanics, such as Young’s elastic modulus or Hooke’s law.

Kuhn argues that these inefficiencies triggered the evolution of the modern, institutionalised process of scientific discovery. Formal definitions of scientific groups meant that an individual no longer needed to rebuild the respective field’s foundations but could build upon a previous body of knowledge. Consequently, the generally addressed literature that was prevalent in previous centuries, such as Darwin’s On the Origin of Species, slowly evolved into specialised literature that is legible only to the specialists, or initiates, of the respective group. Scientific differentiation, i.e., the emergence of new scientific groups centred around a new paradigm, happens by virtue of what Kuhn describes as “novelties”. Novelties are discoveries or inventions inadvertently produced by “a game” (scientific domain) played under one set of rules (paradigm) that, in order to be assimilated, require the elaboration of another set. For example, classical physics, originally one scientific domain, had speciated into several distinct areas of research by the 20th century, namely mechanics, acoustics, optics and electromagnetism. In the contemporary design process, this scientific specialisation can be clearly seen in the composition of a team for a building project: alongside the architect there are structural, mechanical, electrical and civil engineers, in turn supported by specialists from the space planning, urban design, landscape design and geotechnical engineering domains.

2.2.2 Professions and Conflict

Design problems are quintessentially cross-disciplinary problems that require expert knowledge from more domains than one individual can hope to master. Furthermore, the diversity of the stakeholders involved does not stem from just industrial (division of labour) or scientific (differentiation of science) groups: given the impact of a design project on its surroundings, a social and cultural dimension may well be present. Communication across these professional boundaries implies the integration of a wide range of diverse ontologies with their matching sets of values. For example, from a design point of view, the choice of a specific material may be extremely positive if it will age well and fit cohesively with the surroundings. Nevertheless, from an engineering point of view, the same choice has the potential to create additional problems that reflect negatively on the project in terms of environmental performance and energy usage. Similarly, a certain functional distribution (more commercial space, and less housing) is a positive factor for the client, yet has negative implications for the surrounding area. In these simplified binary examples, both parties are right, yet their ontological definitions of value do not match. In the case of the latter example, the designer, not having access to the financial language used by the developer, cannot demonstrate the viability of his solution, and neither can the developer, not mastering the values of design, reach a compromise with the designer.

Further complicating the interaction of a stakeholder group is the fact that their professions (considered here as an abstract system) are not just a positive force in society. Professions exercise control over their respective domains not only out of a selfless need for better standards, quality and precision, but also from a selfish need to preserve their identity and to expand and solidify their claim of expertise over a certain aspect of the material world (Larson, 2017, 1979). Andrew Abbott, in his book The System of Professions (Abbott, 1992), takes this argument further and argues that professions do not operate independently of each other, but exist in a broader "ecological system". Professions, and even more so professional firms, continuously compete with each other over their ecological niches, seeking to enlarge their jurisdictions and to acquire the skills of other domains. This competitive dynamic is visible in the relationship between architects and structural engineers, especially in the case of high-profile projects with a high degree of geometric complexity. Projects such as bridges, airports, football stadiums, etc. require large amounts of ingenuity to both design and build. Consequently, both the architect and the engineer passively claim ownership over the creativity and importance of their roles in the design process by emphasising their contribution. This sometimes leads to heated debate: following a presentation by a member of the engineering team (Clark, 2018) on the collaboration process for the V&A Dundee centre between the engineering team (Arup) and the design team (Kengo Kuma Architects), the ensuing discussion focused on the actual distribution of creative credit between the two disciplines.
The presentation focused on a digitally enabled workflow envisioned by the engineering team, which allowed the design team to modify project parameters in a way that would produce valid engineering analysis and fabrication models, yet still satisfy the architects' need for flexibility. In response, members of the audience from an architectural background argued that it was their profession that was the key to the project's success and beauty, and that the engineers were just "problem solvers"; the approach of constraining the design team's input was described as artificially limiting. Needless to say, the members from the engineering disciplines asserted the opposite, saying that it was their problem formulation and solutions that made the project possible in the first place.

This leads us to two observations, the first of which reinforces Abbott's ecological model of professions: as seen in the example above, professions seek to expand and defend their jurisdictions, recognition and leadership positions. Engineering expands into architectural design; converse examples of architectural design expanding into engineering are also widespread. The second observation is that collaboration between stakeholders facing a design assignment is not a given: the existence of a common problem to solve does not mean the persons involved in its resolution will start from a position of mutual trust. In other words, the disparate ontologies that societal differentiation gave birth to serve as a defensive mechanism for the professional bodies to which they belong. Therefore, creating bridges between them for collaboration purposes, for example between the engineering and the design team in the example above, can be seen as an offensive act by one party, as it can dilute their internal ontological system and set of values. In turn, this leads to a deterioration of trust between stakeholders, as opposed to an enhancement: one of the paradoxes of modernity that will be discussed at length in the following section. This aspect has an important bearing on the formalisation of the alternative approaches used to investigate data representation in Chapter 4 (rather than imposing an ontology, allow end-users to evolve their own on an as-needed basis), as well as classification in Chapter 5 (allow end-users to curate the data being shared rather than be forced to expose all of it).

2.2.3 Modernity, Transparency and Trust

The increasing specialisation and differentiation of the contemporary context has, in the scope of the design process, a dual nature. While benefiting from the increasing precision of knowledge, detail and quality from each of the individual domains, acts of design show an increasing difficulty in integrating all these domains due to their ontological drift away from each other. To shed light on the systems behind the process of this integration and reveal the underlying mechanisms of differentiation and cross-domain exchanges, this review now turns to Giddens’ institutional analysis of modernity.

In his book, The Consequences of Modernity, Giddens proposes disembedding as the underlying mechanism which allows differentiation, or functional specialisation, to happen (Giddens, 1991). Disembedding is understood as "the lifting out of social relations from local contexts of interaction and their restructuring across indefinite spans of time-space", and Giddens identifies two principal manifestations of it: symbolic tokens and expert systems[1] (Giddens, 1991, p. 22). The former is understood as an interchange medium that can be communicated or transacted without regard to its specific characteristics or context. Giddens gives the example of money as a symbolic token: "today, money proper is independent of the means whereby it is represented, taking the form of pure information" (Giddens, 1991, p. 25). Accordingly, symbolic tokens embody information that can be trusted to represent a specific fact, action or object across both a temporal and a spatial dimension. In the case of the design process, for example, the architect's building plans are symbolic tokens by which design intent is communicated, as are sections, elevations, three-dimensional models, structural specifications and bills of materials: in lieu of "the real thing", they are used to communicate information pertaining to reality. The latter disembedding mechanism, namely that of expert systems, is described as "systems of technical accomplishment or professional expertise that organise large areas of the material and social environment in which we live today" (Giddens, 1991, p. 68). Expert systems provide guarantees of expectations across time and space. For example, architecture is an expert system that guarantees design knowledge and the ability to envision a building; medicine guarantees expert care and treatment in the face of illness; a driver's license guarantees that one can safely operate a car; etc.
Summing up, symbolic tokens provide informational, or factual guarantees by which individuals can communicate, whereas expert systems provide guarantees on knowledge and domain expertise.

In a similar manner to Kuhn, Giddens notes that expert systems develop their own proprietary language, values and tools that are often incomprehensible, or opaque, to the outside public. He then argues that the crucial element for the functioning of a modern society that is based on transactions of symbolic tokens and expert systems is that of trust: trust is "confidence in the reliability of a person or system regarding a given set of outcomes or events"; trust is vested "in the correctness of abstract principles and technical knowledge" (Giddens, 1991, p. 88). For example, a person crossing a bridge implicitly trusts the designers and the builders of the bridge to ensure that it will not collapse; an architect trusts the structural engineer to have correctly specified the building's columns; a plane manufacturer trusts his parts supplier for the material integrity of the delivered pieces; a person travelling by plane trusts the plane manufacturer, as well as the governmental bodies that oversee technical repairs, etc. Summing up, according to Giddens, trust, or confidence in abstract capacities, underpins all of modern society's operations and transactions.

It follows that the design process, in order to be productive and achieve its aims (for example, delivering a building), relies on a large amount of trust between the technical and non-technical stakeholders involved. In his critical paper, The Tyranny of Light, Tsoukas argues that trust needs to be continuously produced in such a system in order for it to function (Tsoukas, 1997).

It is not just the design process, but all late modern societies' operations that are dependent on trustworthy knowledge for their functioning. In the contemporary context, knowledge, according to both Giddens and Tsoukas, is reduced to pure information, i.e., "objectified, commodified, abstract and decontextualised representations". This serves today's modern informational society’s needs of producing trust; knowledge, once transmuted to information, can be openly communicated and therefore lead to, or legitimise claims of, transparency and, ultimately, trust.

Nevertheless, Tsoukas reveals, this assumes a view of information ("a collection of free standing items") as being neutral and without room for interpretation; furthermore, its status is that of "an objective, thing-like entity" that "exists independently of human agents" (Tsoukas, 1997). Communication, in this scenario, is coerced into a purely technical model, as originally defined by Shannon and Weaver (1948), that dehumanises it. In turn, this gives birth to one of the major problems in generating trust and understanding between persons and domains of knowledge: by stripping away the inferential dimension of communication, namely the human act of interpretation, information is generated in a way that can no longer represent reality meaningfully; it diminishes one’s capacity for understanding and stops being useful as a transactional entity between stakeholders. For example, a building cannot be reduced to the sum of its parts. By deconstructing a building into its objective, quantifiable composing elements, namely walls, columns, windows, floor slabs, dimensions and proportions, etc., its informational representation will inevitably lose aspects that the system cannot easily measure. These can be, for example, the building's historical and cultural value, the way it engages with its surroundings, its personal value for its inhabitants or users, its symbolic value as a landmark, etc. This is equally valid for any act of communication, regardless of what it is designed to transmit: if a system were designed to measure just the cultural aspects of a specific building, it would miss its potentially life-endangering engineering and structural flaws.
Consequently, communication and information have a very strong, often overlooked, political dimension: information exists and is created for a purpose by an author, and if this intention is not easily inferred or made explicit, more information will have the effect of reducing transparency rather than increasing it (Tsoukas, 1997).

The ideal of transparency based on objectified knowledge hinders the building of trust between stakeholders due to the separation of information and intent, or purpose (Tsoukas, 1997). Furthermore, this dynamic undermines the trust that is necessary for expert systems to function correctly: because information is decontextualised, it must subsequently be placed into a context where it can be interpreted. Nevertheless, these contexts differ and thus lead to different and potentially conflicting interpretations. Therefore, Tsoukas argues, trust is less likely to be achieved when more information on an expert system's inner workings is made available. A simplified anecdotal example can be formulated as an interaction between an architect and a developer: based on initial design input, the developer creates a financial summary and finds the design too expensive. He then demands a different solution from the architect, who, due to lack of trust, questions the financial calculations of the developer and attempts to provide his own, in a way that would justify his design. Because the architect is not fully aware of the internal costing model that the developer uses, his version falls short when evaluated by the developer. Thereafter, the developer goes on to request specific design changes, as his trust in the architect's design capabilities (projected from his suspicion of the architect's inability to correctly cost the project) is now reduced. Needless to say, this cycle repeats itself several times, each iteration increasing transparency. Nevertheless, in most cases, due to the unresolvable ontological differences between the architect's point of view and that of the developer, it does not reach a successful outcome.
This spiralling effect of mistrust—the more transparent an expert system becomes, the less it will be trusted; the less trusted an expert system is, the greater the calls for transparency—actively undermines communication and the effective interaction of practitioners from various domains.

Summing up, so far, the theories of Kuhn, Abbott, Giddens and Tsoukas have been presented in an attempt to sketch a higher level theoretical framework of the mechanisms and dynamics underpinning the rich interactions between actors. In doing so, it is revealed that "differentiation", the process by which new disciplines and their representatives emerge, is a product of both (a) industrial and manufacturing needs, through the "division of labour" as theorised by Adam Smith, Kant, Durkheim and others, and (b) scientific speciation as described by Thomas Kuhn. The two differentiation mechanisms come with very similar caveats, namely the alienation of workers in the case of the division of labour (Marx) and the increasing difficulty of a specialised scientific domain to interact with others outside it (Kuhn), which are echoed by Tsoukas and Giddens. Subsequently, Giddens' analysis of modern society explains the dynamic of societal differentiation, and the interaction of the resulting specialised groups, through two related concepts. The first, expert systems, describes how large areas of the material world are organised and shaped by specialised groups of knowledge and expertise with proprietary systems of value and categorisation. The second, symbolic tokens, explains how these systems communicate and exchange information. For both to function correctly and interact with each other, the former requires trust to be vested in abstract capacities, whereas in the case of the latter, trust is vested in abstract information. Consequently, trust becomes a central resource for the operation and interaction of differentiated and specialised groups of disciplines and the stakeholders that represent them. As such, the friction within the design process can be explained as a lack of trust. Tsoukas argues that this is due to the fact that modern society is based on a technical model of communication, one in which information is dehumanised and stripped of its social and political dimension.
Information, once decontextualised, needs to be re-embedded in order to be interpreted. When crossing domains of specialisation, Tsoukas argues, information is interpreted in a different ontological framework and thus creates conflicting interpretations through which the trust necessary for fruitful interactions is undermined.

2.3 Design & Convergence

Specialisation constructs a productive environment in which specific phenomena may be studied in more depth and thus contribute to a greater understanding of the surrounding world. Where this dynamic of modern society can be described as a divergent process, design is the opposite: the act of creating a building, or of shaping any small part of the built environment, necessitates the coordination of a large and diverse group of professions and stakeholders or, in other words, the convergence and collaboration of multiple expert systems. Surprisingly, the same linguistic and psychological mechanisms that underpin the creation of meaning, namely metaphors and analogies, play both a divergent role, by supporting modern society’s differentiation and specialisation, and a convergent one, by enabling a shared understanding between different ontologies.

2.3.1 Metaphors and Conceptual Displacement

Metaphors play a key role in the emergence of new theories and domains, in science and elsewhere. Building on Thomas Kuhn’s contemporary work on the dynamics of scientific revolution, Donald Schön argues that metaphors and analogies, when used in a projective way (as opposed to a purely descriptive one), lead to a process of conceptual displacement (Schön, 2011). Similarly, Lakoff and Johnson argue that conceptual metaphors, in which one idea is understood in terms of another, are used by people to understand abstract concepts (1980).

Metaphors transcend their purely ornamental role and become the main vehicle for the generation of new knowledge and, as such, underpin the linguistic and psychological process of creativity; conceptual displacement can be seen as a process by which old concepts or theories are fitted to new situations. Schön argues that this is an act of transmutation of ideas and concepts, and not just a superficial transformation. Old and new concepts are simultaneously defined through a sequence of transposition, interpretation and correction (Schön, 2011, p. 57). Two functions of the metaphor thus emerge: a radical one, which lies at the base of new knowledge, and a conservative one, which explains how old theories underlie new ones[2]. The former, radical function can be illustrated by looking at the “sleeping metaphors” (Schön, 2011, p. 79) present in scientific language, e.g. “atomic wind”, “biological transducer”, “computer memory”. These phrases are indicative of concepts that have been carried over and act as projective models from one discipline to another. The latter, conservative function of metaphors is visible in the fact that “Greek theories of scale, of atomic processes, and of vision […] have managed to preserve themselves virtually intact” until today (Schön, 2011, p. 139).

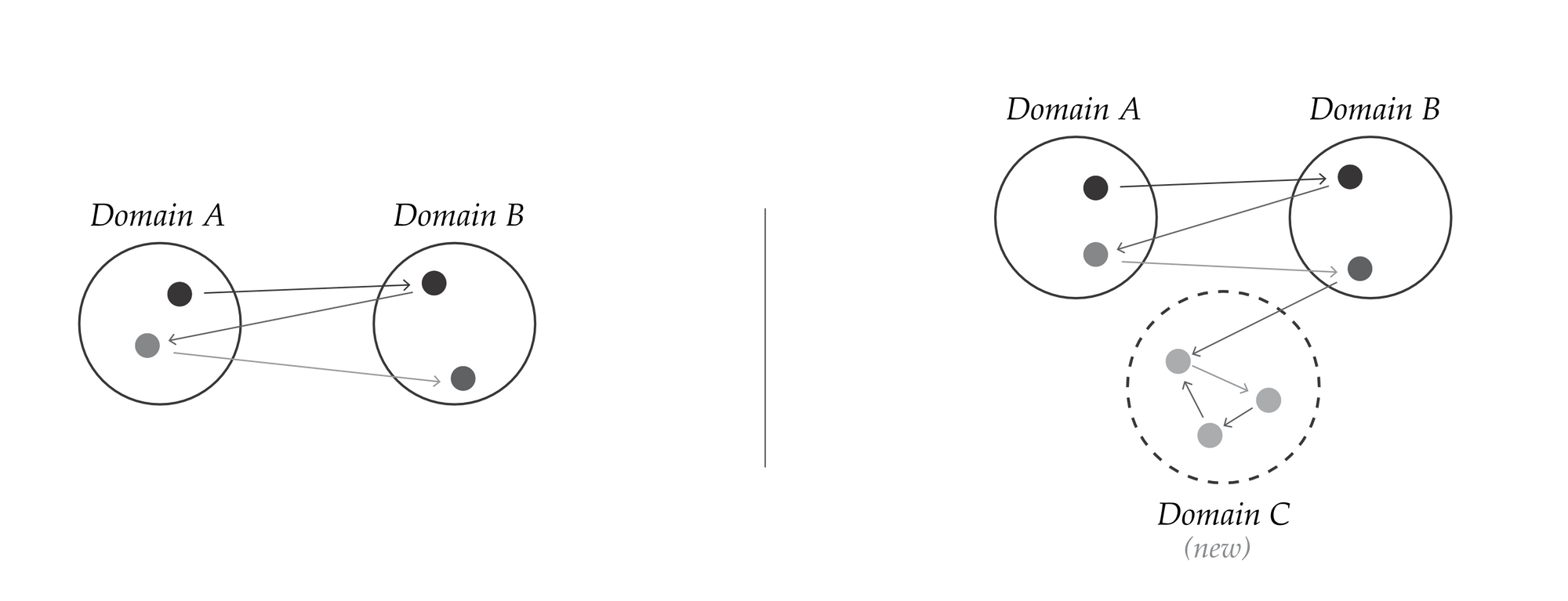

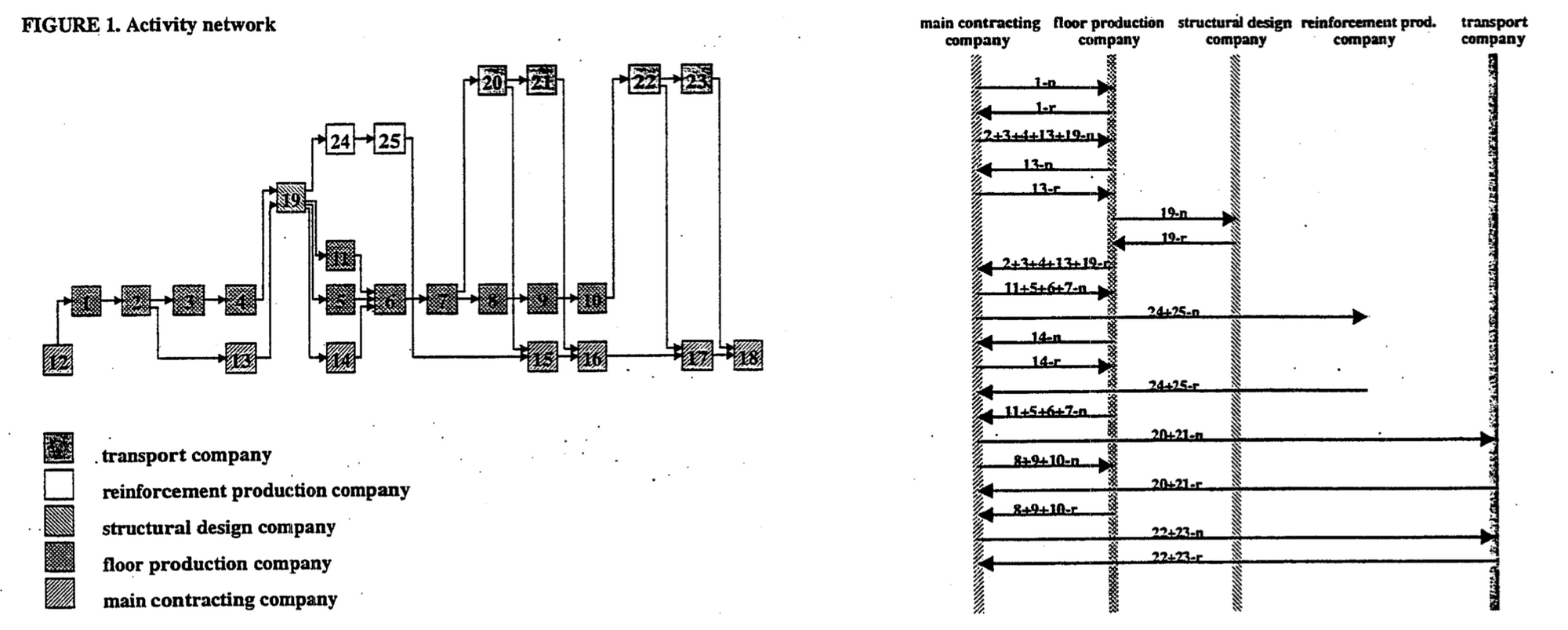

Figure 1: Asymmetric reflections of meaning (left); The emergence of a new domain (right).

Sergio Sismondo, in his analysis of metaphorical thinking in scientific theories, argues that science itself is impossible without it “because metaphors are needed to provide the cognitive resources to explore new domains” (Sismondo, 1997, p. 127). In his view, metaphors take structure from one domain and apply it to another (Sismondo, 1997, p. 137). This act of conceptual reorganisation has a performative aspect by which a certain structure can be forced on a subject: “metaphors change some of the structure of associations of the vehicle, not just the tenor[3]” (Sismondo, 1997, p. 141). He sums up by asserting that metaphors are asymmetrical descriptors that reflect concepts from one field to another, and by doing so, create new meanings and shared values. This process is illustrated above, in Figure 1.

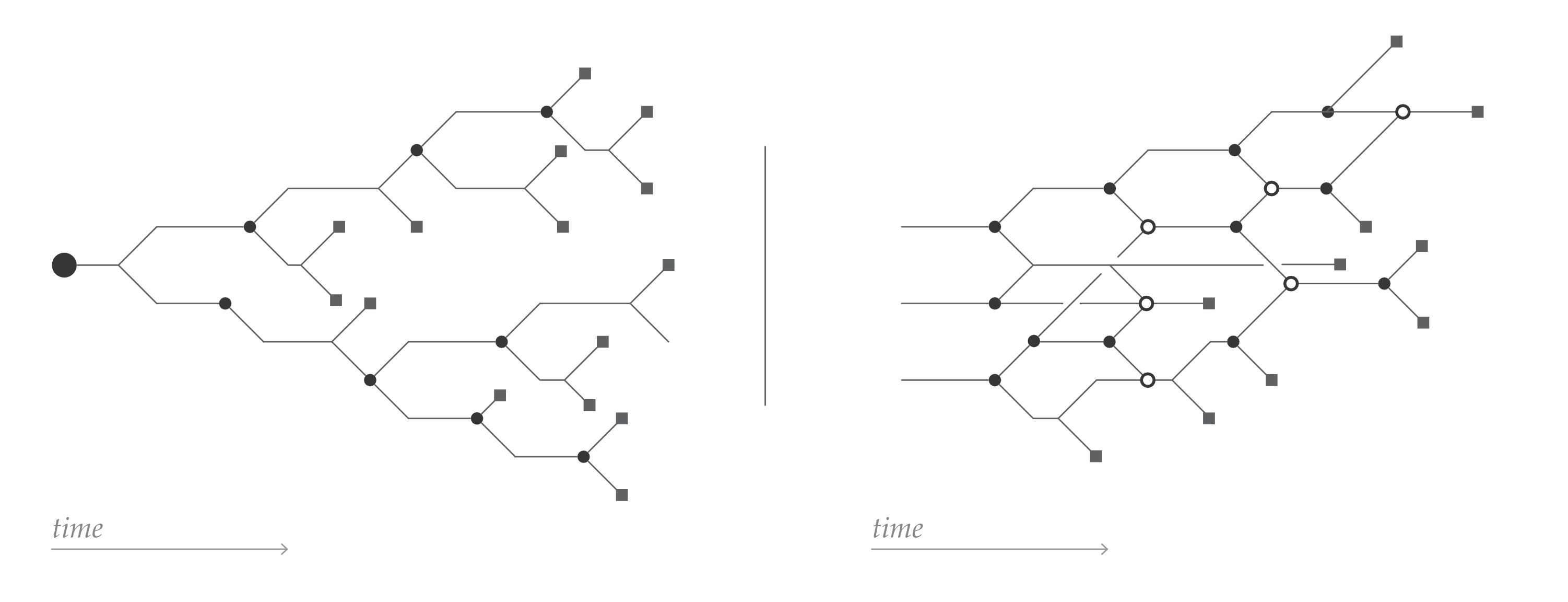

Figure 2: Ecological phylogeny displaying ever increasing branching (left) vs. Cultural phylogeny showing both branching and merging (right).

Thus, Schön's conceptual displacement and Sismondo's asymmetric descriptors envision a dynamic process of divergence and speciation of new domains and their separate ontologies. Nevertheless, from the perspective of the newly formed expert system (whether a professional, scientific, or industrial specialisation), this process can also be seen not as one of speciation but as one of convergence, in which the productive interaction between two different domains gives birth to a new, internally coherent ontology. In some cases, the original domains continue to exist independently of each other, with the new domain claiming its own identity. In other cases, the precursors may have partially folded into the new specialisation or ceased to exist. Kuhn noted that specialisation tends to widen the gulf between domains and results in a communication problem between separate bodies of knowledge: ontologies are not necessarily interchangeable or, if they have evolved over a long time, legible to persons outside the respective field. Notwithstanding, it is important to assert that the process of differentiation is countered by convergence, and that both are based on the same linguistic and psychological mechanisms. Therefore, modern society is described not by a biological phylogeny (an ever-branching tree of species and subspecies) but by a cultural phylogeny, the main difference being that domains do not speciate ad infinitum, but also merge into strands that may preserve the identity of their predecessors in a latticed pattern (Figure 2).

Subsequently, a design problem acts as a convergent point in this network of knowledge strands, attracting many domains and their representative stakeholders due to its complexity. Nevertheless, this network is not fully prescribed by the problem at the beginning, because, as shall be seen, the problem itself is not yet fully defined. In what follows, this investigation will look at how the mechanisms of differentiation and convergence, up to now described from a theoretical perspective, perform and affect the interactions of stakeholders in an applied design context.

2.3.2 Wicked Problems

Horst Rittel, together with Melvin Webber, changed the field of design by linking it with politics. They achieved this by studying planning (and design) problems from the point of view of a pluralist society that is dominated by complex and potentially confrontational interactions between stakeholders. In their paper, Dilemmas in a General Theory of Planning, Rittel and Webber distinguish between “benign” or “tame” problems and “wicked” problems (Rittel and Webber, 1973). Tame problems are well-defined problems with findable solutions that may easily be evaluated on a quantifiable scale. For example, a mathematical problem can be considered tame: it has a verifiable solution and one can know when the problem is solved. Equally tame are the sizing of a specific column based upon the load it carries, or the definition of the number of sanitary spaces needed based on building occupancy. On the other hand, wicked problems are problems that stem from the inner tension embedded in contemporary society and its accompanying paradoxes: they are complex assignments that cannot be solved through a linear approach[4] in the manner of tame problems.

One of the defining characteristics of a wicked problem is that its understanding is directly dependent on its proposed solution: “the information needed to understand the problem depends on one’s idea of solving it” (Rittel and Webber, 1973). Following this, wicked problems do not have a definitive stopping rule: each proposed solution generates a new formulation of the problem, thus leading to a new solution, ad infinitum. Rittel and Webber affirm that work on a wicked problem never ends because an optimal solution has been found; rather, the process of solving it is halted due to external considerations, like running out of time, money or patience. In the case of the contested jurisdictions of the architect’s and the engineer’s professions, analysing the design process as a wicked problem shows that the success (or failure) of a project is determined by the iterative act of defining and solving the problem. For example, a virtuous cycle can be seen as follows: (1) the architect defines the problem by proposing a design solution, (2) subsequently the engineer solves it, but in so doing contributes to a greater understanding of the problem, therefore (3) the architect redefines the problem by proposing a new design solution, at which point (4) the engineer comes up with a refined structural solution, and so on until a satisfactory solution is reached. As such, design solutions can be said to emerge gradually “as a product of incessant judgement subjected to critical argument” (Rittel and Webber, 1973) that has the effect of slowly evolving a shared set of values between the stakeholders involved.

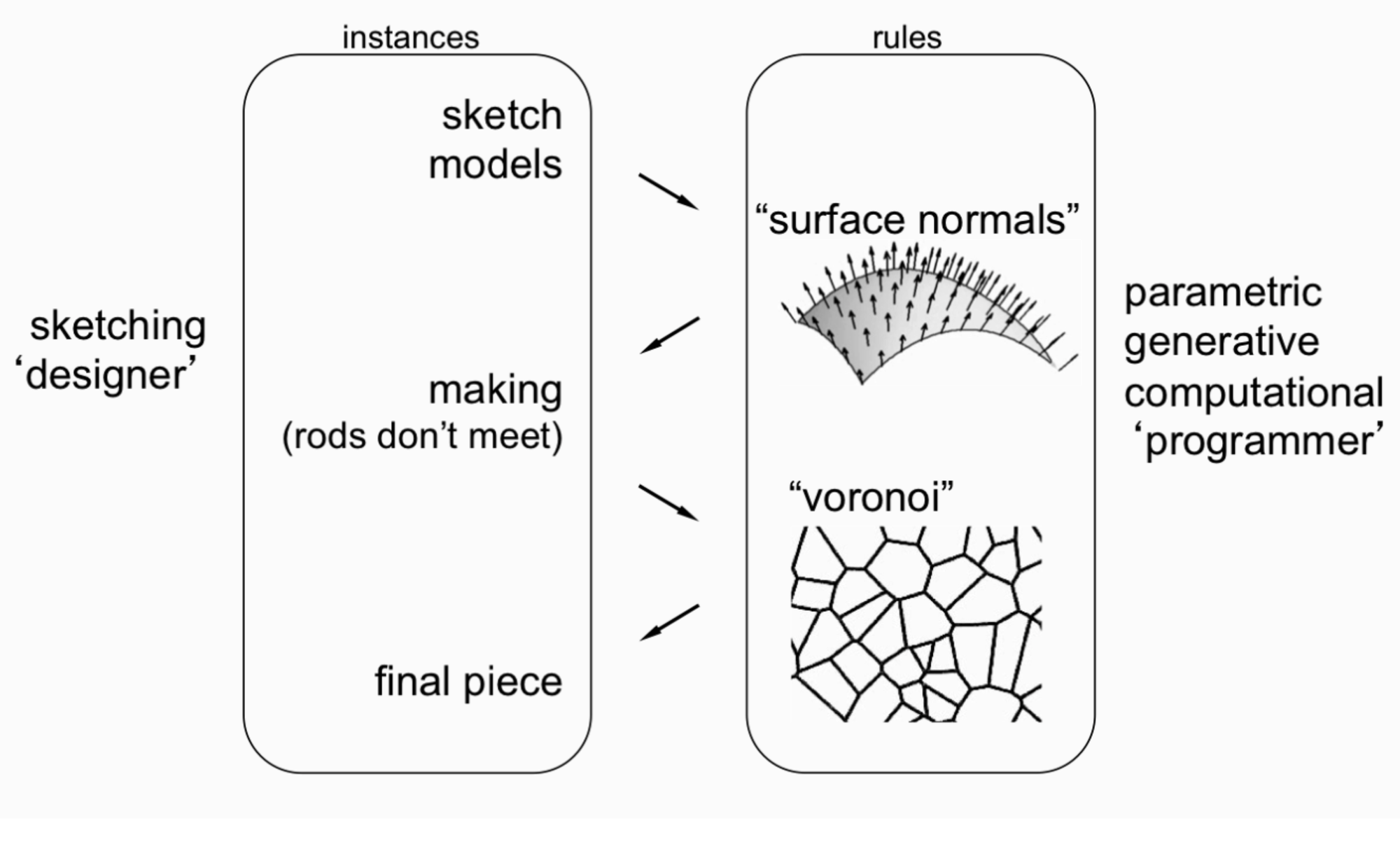

Figure 3: "Design alternates between figure and representation" (Hanna, 2014).

Nevertheless, Rittel and Webber note that “diverse values are held by different groups of individuals – that what satisfies one may be abhorrent to another, that what comprises problem-solution for one is problem-generation for another” (Rittel and Webber, 1973). As observed by both Kuhn and Tsoukas, the differentiated and specialised modern society does not have one shared ontology; this needs to be constructed on a problem-by-problem basis within the interactional network of stakeholders that participate in its solving. This status of ontological uncertainty is, according to Hanna, an inherent feature of the creative design process. He describes it as an incertitude of how the “design problem or situation can be represented or modelled in the first place” (Hanna, 2014). As an example, he describes the process undertaken for the fabrication of a series of sculptures by Antony Gormley during which the “design progressed by virtue of repeated changes in abstract representation” (Figure 3). These repeated alterations crossed the boundaries between several abstract and material domains, and each crossing redefined, or enriched, the problem definition. It follows that the design process contains more variables than can be represented in a finite model (Schön, 1991) or known from the outset, thus actions taken within it tend to produce consequences that cannot be predicted: an attempt to solve a problem will actually modify or change its representation, or definition.

2.3.3 Solving Wicked Problems

The act of ontological revision that underpins creative design, depending on the scale or breadth of the issue addressed, is performed either by one sole practitioner or within a stakeholder network. If the problem is within the remit of a single discipline, for example architecture, the process benefits from an internally coherent ontology, or at least a set of matching or relatable concepts. But when the scope of the problem expands such that more disciplines are involved, as is usual with most design problems, new dynamics of interpersonal and inter-professional communication come into play, and the problem being addressed becomes even more wicked.

Problem wickedness, social complexity and technical complexity are, according to Jeff Conklin (2005), the three main categories of forces that tend to fragment a project or “pull it apart”. Whereas social and technical complexity could be seen as symptoms of wickedness, he draws a distinction between them: “wickedness is described as a property of the problem/solution space and the cognitive dynamics of exploring that space, social complexity is a problem of the social network that is engaging with the problem” (ibid., p. 12). The wickedness of a problem is dangerous if the problem is misidentified as being “tame” or if it is tamed in an unproductive way. Social complexity, on the other hand, arises from the structural relationships between stakeholders and often takes the form of “cultural wars” between departments or specialisations. Finally, technical complexity is potentially, but not always, a fragmenting force. It arises from the large number of available technological solutions for a given problem, as well as from the unintended side-effects of their interaction, which cannot be fully predicted.

Conklin proposes that, in order for design problems to be resolved, organisations and the groups involved must act in a way that demonstrates collective intelligence: an emergent form of collaboration, as opposed to simple collaboration, that amplifies knowledge and expertise from one domain when it interacts with another. Seen within this framework, simple collaboration can be considered a purely zero-sum game of informational exchange between parties that does not lead to new insights. Collective intelligence, on the other hand, is seen as a phenomenon in which “the whole is greater than the sum of the parts”.

It is therefore necessary that there is a sense of shared understanding as well as shared commitment between the stakeholders involved in the process. These two, when present simultaneously, amount to coherence, which according to Conklin, is “the antidote for fragmentation”. Defragmenting a project, as such, becomes an act of ensuring the existence of shared understanding of the project’s background and issues, of a shared commitment towards the project’s objectives and measures of success, and most importantly, of shared meaning for key terms and concepts amongst the stakeholders involved in the process (Bechky, 2003; Conklin, 2005; Lewicki et al., 1998; Walz et al., 1993).

In a manufacturing context, Bechky’s study on creating shared understanding reveals the importance of embedding the communication between the various stakeholders in their respective workplace contexts (Bechky, 2003). According to Bechky, understanding is “situational, cultural and contextual”; therefore, its creation cannot rely on simple knowledge transfer. Echoing Giddens’ and Tsoukas’ concerns regarding disembodied (decontextualized) information, Bechky argues that communication problems arise between members of different groups due to a lack of common ground, which leads to information being interpreted in different ways that reflect the context in which it is received. Nevertheless, she illustrates through a case study in the manufacturing of processor units that, if members of these different groups start providing solutions (or defining the problems) based on the differences between their work contexts, a shared set of common meanings and terms arises that contributes to a richer understanding of the problem being faced. This is achieved sequentially, first through a set of transformative actions by which one stakeholder tries to conceptualise the knowledge of another within the context of his own work. Gradually, these transformative actions accumulate and start shaping a common ground between the stakeholders (Bechky, 2003, p. 234).

For example, the interaction between an architect and a structural engineer involves many exchanges of information. Starting from one instance of a design, a structural solution is proposed. Nevertheless, this is not satisfactory for the architect: imagine, for example, that a large overhanging body has the (previously unknown) effect of requiring an extra set of columns that are visible on the ground floor. This leads the architect to redefine the design with a much smaller overhang but also a larger ground floor space, in order to hide or incorporate the new columns. The structural engineer comes back with a revised solution that, given the smaller dimensions of the overhang, does not necessitate the columns that were initially the source of the architect’s irritation. In an ideal scenario, the architect would meet with the structural engineer and they would contextualise their values in each other’s domain: the architect would soon learn that overhangs above a certain length require more supporting columns, which potentially ruin the outside space along the building; the structural engineer would understand that a free sheltered public space is an important feature of the building in the eyes of the architect, and thus be able to inform his proposed solutions with this in mind. Essentially, “by exposing members of different communities [domains] to the perspectives and work of the others, these interactions reduce the differences in the understanding of the groups” (Bechky, 2003, p. 236) and create a set of relationships between the ontologies involved in the project’s resolution.
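The define-solve exchange between architect and engineer can be sketched, purely for illustration, as a short loop. Everything in the following Python fragment is an assumption made for the sake of the example rather than part of the cited literature: the function names, the 6 m cantilever threshold, the two columns, and the one-metre revision step are all hypothetical. The only ideas taken from the text are that each structural answer feeds back into the architect's next proposal, and that the process stops either when a satisfactory state is reached or when an external budget (time, money or patience) runs out.

```python
def engineer_solve(overhang_m, max_cantilever_m=6.0):
    """Hypothetical structural rule: an overhang longer than the
    threshold needs two visible ground-floor columns."""
    return 0 if overhang_m <= max_cantilever_m else 2


def architect_redefine(overhang_m, columns):
    """Hypothetical reaction: shrink the overhang by one metre
    whenever the structural answer introduces columns."""
    return overhang_m - 1.0 if columns > 0 else overhang_m


def define_solve(initial_overhang_m, max_rounds):
    """Alternate between problem definition and solution.

    max_rounds stands in for the external stopping rule
    (running out of time, money or patience).
    """
    history = []
    overhang = initial_overhang_m
    for _ in range(max_rounds):
        columns = engineer_solve(overhang)
        history.append((overhang, columns))
        if columns == 0:  # satisfactory for both parties
            break
        overhang = architect_redefine(overhang, columns)
    return history


if __name__ == "__main__":
    for overhang, columns in define_solve(9.0, max_rounds=10):
        print(f"overhang {overhang:.1f} m -> {columns} columns")
```

In this toy version both parties learn nothing between rounds; the ideal scenario described above would correspond to the architect knowing the threshold in advance and proposing an acceptable overhang in the first round.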

It is important to note that the communication and collaboration context does not need to be fully cooperative (the stakeholders do not need to trust each other blindly) for it to operate successfully and allow for “collective intelligence” to emerge. Based on an analysis of communication inside a software practice, Walz et al. (1993) show that conflict facilitates the learning process that leads to shared understanding. In scripted social dialogue, conflict usually takes the form of either someone playing the role of the devil’s advocate, helping the decision maker test his assumptions, or a dialectic scenario in which two sides pit thesis and antithesis against each other. The latter approach allows, according to Walz et al., the generation of the information necessary for decision making and the clarification of situations where there is more than one way to define a problem (ibid.). Underpinning this view of productive conflict is Lewicki’s later research, which shows that trust and distrust do not exclude each other and that their interaction can be productive (Lewicki et al., 1998). This is based on the finding that trust and distrust are not polar opposites on the same scale, but “functional equivalents” (ibid.). Both trust and distrust function to reduce the complexity of a given problem (social or technical) by assuming beneficial outcomes in the case of the former and injurious ones in the case of the latter. Therefore, a state of ambivalence, in which trust and distrust coexist, is a key driver in the dynamics of resolving or defragmenting wicked problems and, to a certain extent, sets the stage for Conklin’s state of coherence.

According to Weber and Khademian (2008), networks are seen as the best way to govern and manage wicked problems. They are defined by enduring exchange relationships between organisations, people and groups of people. Public and private governing structures have evolved from a hierarchical model of organisation to a networked one, as the latter demonstrates several advantages over the former when it comes to accomplishing complex tasks. Networks can share scarce resources and achieve collective goals better; they demonstrate flexibility and efficiency; and they can accumulate the vital resources and power needed to carry out shared tasks. Most importantly, networks “allow participants to accomplish something collectively that could not be accomplished individually” (ibid.). Further reinforcing this view, from an analytical angle, is Watts and Strogatz’s famous analysis of small-world networks. They conclude that “dynamical systems with small-world coupling” (i.e., semi-random networks not unlike a group of people involved in the design process) show evidence of “enhanced signal-propagation speed, computational power, and synchronizability” (Watts and Strogatz, 1998). The exchange relationships underlying a network, regardless of its goal, are essentially informational flows. From this point of view, Weber and Khademian stress the importance of the transactional requirements of such communication, i.e., not just the information itself, but how it is transmitted and what is done with it. They describe effective networks as those able to successfully transfer knowledge, ensure and communicate its receipt and comprehension, and integrate it into new knowledge. As will be discussed later on in this chapter, their analysis, coming from an administrative and management angle, describes a vital ingredient of an inferential communication model, namely a system of evaluating receipt and comprehension of informational exchanges.
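The small-world effect referred to above can be illustrated with a short, self-contained sketch. The Python fragment below builds a ring lattice and randomly rewires a fraction of its links, in the spirit of the Watts and Strogatz construction; it is a simplified illustration and not a reproduction of their experiments. The network size, the number of neighbours, the rewiring probability and the random seed are arbitrary assumptions, and the average shortest path length is used here only as a rough proxy for how quickly information could propagate through a stakeholder network.

```python
import random
from collections import deque


def ring_lattice(n, k):
    """Link every node to its k nearest neighbours on a ring (k even)."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(1, k // 2 + 1):
            adj[i].add((i + j) % n)
            adj[(i + j) % n].add(i)
    return adj


def rewire(adj, k, p, rng):
    """With probability p, redirect each lattice edge to a random node.

    Self-loops and duplicate edges are avoided, so the number of
    edges stays constant.
    """
    n = len(adj)
    for j in range(1, k // 2 + 1):
        for i in range(n):
            old = (i + j) % n
            if old in adj[i] and rng.random() < p:
                candidates = [t for t in range(n)
                              if t != i and t not in adj[i]]
                if not candidates:
                    continue
                new = rng.choice(candidates)
                adj[i].discard(old)
                adj[old].discard(i)
                adj[i].add(new)
                adj[new].add(i)
    return adj


def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered pairs (BFS)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def average_clustering(adj):
    """Mean fraction of a node's neighbour pairs that are themselves linked."""
    coeffs = []
    for nbrs in adj.values():
        d = len(nbrs)
        if d < 2:
            coeffs.append(0.0)
            continue
        links = sum(1 for a in nbrs for b in nbrs if a < b and b in adj[a])
        coeffs.append(2 * links / (d * (d - 1)))
    return sum(coeffs) / len(coeffs)


if __name__ == "__main__":
    lattice = ring_lattice(200, 6)
    small_world = rewire(ring_lattice(200, 6), 6, 0.1, random.Random(1))
    for name, g in (("lattice", lattice), ("rewired", small_world)):
        print(name, average_path_length(g), average_clustering(g))
```

Under these assumptions, a small amount of rewiring shortens the typical distance between any two nodes while local clusters largely persist, which is the structural property that the quoted conclusion about signal propagation relies on.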

Where Weber and Khademian describe these networks as mainly composed of human actors, Dawes et al. describe them as socio-technical systems in which humans, organisations and institutions coexist “in a mutually influential relationship with processes, practices, software and other information technologies” (Dawes et al., 2009). They go on to argue that, for such networks to be effective, they must exhibit a culture in which the sender of information, by default, questions the relevance of the information he or she produces and, if there is even a remote chance of it being relevant, shares it: “a need to share network culture” (ibid.). Given the symbiotic relationship between technology and its application, Dawes et al. warn that approaching networks from a purely IT perspective is problematic. Networks undergo a constant evolution; nevertheless, established patterns of exchange do emerge. As such, in order to provide for this process of definition, the development processes of early formed stakeholder groups “need to emphasise early, open dialogue and examination of assumptions and expectations”, thus supporting Lewicki’s claim that an ambivalent state of trust and distrust is productive, and similarly Walz’s notion of scripted conflict.

Summing up, it is important to note that shared understanding amongst a varied group of stakeholders does not entail the notion of a shared ontology. While the latter scenario would be ideal, it is an unreachable goal: as Roberts (2000) puts it, “getting the whole system in one room” is an impossibility. This is because the definition of the “system” itself is in constant flux, and thus cannot be contained in a “room”. The socio-technical network that aggregates around a wicked problem is continuously evolving as people, ideas, software, and other actors (both human and non-human) continuously influence each other and their collective interpretation of the problem. The connections between a network’s actors, as well as the actual actors themselves, change and mutate in time. It follows that coherence within this context can only be achieved by challenging and questioning information and knowledge continuously through dialogue. By doing so, a certain interdependence is created amongst key concepts from ontologically different groups. In some exceptional cases, these can crystallise into a new discipline or profession, but in most day-to-day wicked design assignments, simply understanding the trade-offs involved between one group’s values and another’s is enough to engender the productive cycle of define-solve iterations required to manage or resolve wicked problems.

2.4 Design and Communication

Design problems necessitate a large and diverse number of stakeholders to participate in their resolution, thus making the above normative approaches extremely relevant for tackling communication issues in a design process. The RIBA Design Management Guide describes the contemporary context as an intense and pressured environment due to the increasing complexity in managing the production, coordination and integration of design in projects (Sinclair, 2014). This is due to conflicting situations emerging from increasing accuracy demands in timing and costing as well as managing design input throughout both time and areas of expertise, like the various contractors and sub-contractors involved in the building process as well as other specialist groups. It can be said that today, “an examination of professional diaries is likely to show that most architects spend more time interacting with other specialist consultants and with fellow architects, than working in isolation” (Lawson, 2005, p. 239). Subsequently, the following section, by building on literature that tackles specifically how design professions cooperate and work together, will attempt to reveal the causes behind the friction in the communication process.

In The Reflective Practitioner, Donald Schön analyses how disciplines and individuals interact with a given problem and build both personal experience and useful domain-wide knowledge (Schön, 1991). In a chapter dedicated to architecture, he describes design as a “reflective conversation with the situation” (ibid., p. 76). In his analysis of the design process of an architecture student, supervised by her tutor, he reveals a certain protocol that unfolds in sequential moves. Each is a “local experiment which contributes to the global experiment of reframing the problem” (ibid., p. 94), and thus represents a dialogue with the situation itself. An action in one direction or the other reveals certain aspects of the problem, positive or negative, which in turn, by contributing to the architect’s understanding of the problem, help in the planning of the next move. This communicative act between the formulation of a design problem and its solution is the main task of the designer. Because it happens internally, either within the designer’s own thought process or within the discipline itself, Schön calls this process an act of reflection in which information and knowledge are actively reflected between the surface of a problem definition and its potential suggested solution.

Further supporting this argument is Lawson’s assertion that designers negotiate between “the problem and the solution view”, where the former is understood as “needs, desires, wishes and requirements” and the latter is described in terms of “material, forms, systems and components” (Lawson, 2005, p. 272). Lawson describes the design process as a conversation that aims to reconcile conflict between these two views, during which it is not just the solution (or design) that evolves, but also the problem definition. This is a co-evolution model of the design process that explains it as “a series of solution states each evolving from the previous one, in parallel to a series of problem states again each evolving from the previous one” (Lawson, 2005, p. 272; Maher and Poon, 1996).

Nevertheless, the effectiveness of this process has limitations. The need for interaction with external stakeholders (actors that come from another discipline, or simply people that have vested interests in a problem by virtue of the context they are in) means that internal decisions must be publicly justified, and introduces a political element to the exchange. The process of internal reflection described previously does not lend itself easily to being made transparent, even in a curated and carefully chosen way. In his analysis of the discipline of planning, Schön describes the interaction between an urban planner and a developer during the initial phases of an ultimately abortive potential project. Both parties were engaging in a process of internal “conversations with the situation” but instead of choosing to transparently reveal information, they chose to steer the situation in a managed way and “withheld negative information, tested assumptions privately, and sought to maintain unilateral control over the other” (Schön, 1991, p. 269). In Schön’s example, this led to both parties making wrong assumptions regarding each other’s interests, and the situation ultimately evolved into the developer’s decision to not go forward with the redevelopment plan. This protectionist attitude towards one’s private resources of knowledge is further supported by Lawson’s affirmation that “many designers seem to widen rather than bridge the gap between themselves and others” (Lawson, 2005, p. 234).
Going beyond the popular perceptions of a designer as a creative genius, at a professional level this behaviour can be ascribed to the competing nature of expert systems or disciplines and, equally important, to the need to avoid Tsoukas’ paradox of transparency mentioned earlier in this chapter: too much transparency, by allowing information and knowledge to be interpreted in a different context than that in which it was created, allows for diverging and often conflicting interpretations and thus undermines the trust it was intended to build.

Nevertheless, reinforcing Giddens’ thoughts on modern society, Schön asserts that trust is exactly what is needed for such a reflective approach to work across individuals, disciplines or professions. This can be partly achieved by full disclosure of information, as it produces a climate in which parties are more disposed to understand each other’s point of view (Schön, 1991, p. 352). Furthermore, actors involved in the design process should engage with each other in a reflective contract, one in which both parties renounce attempts at “win/lose games of control” (Schön, 1991, p. 303), and openly admit uncertainty (the basis for the process of reflection-in-action) without misinterpreting it as weakness or incompetence. In short, there is a growing need for cooperative inquiry within the (potentially) adversarial context of the design process: ontological revision, or conceptual displacement, if done publicly and openly, can often be stigmatised or have adverse effects if taken advantage of.

How does this map onto the broader landscape of AEC? The RIBA Design Management Guide, mentioned earlier in the introduction of this section, underlines the importance of the project manager as a communicator and enabler of dialogue throughout the stages of the design process. Nevertheless, when it comes to information exchanges, the suggested approach becomes very specific, outlining “what [information] should be produced and, more specifically, when it should be produced, in support of a collaborative approach” (Sinclair, 2014, p. 3). While it is acknowledged that each project is unique, and therefore has different requirements in terms of data and collaboration flows based on project specifics, constraints and client objectives, there is a very clear emphasis on exerting control over the whole process through “rigorous application of standards and protocols” (Fairhead, 2015, p. 89). Ironically, exerting control inhibits the evolution of the stakeholder network surrounding a design problem and, to a certain extent, discourages the acceptance of uncertainty as an integral part of design. Consequently, while it can be assumed that communication will and should happen in order to achieve shared understanding and facilitate the management of a wicked design problem, contrary to current trends in AEC, no assumption can be made about how it will happen and amongst whom: the design process does not have a fixed topology and constituency but, as mentioned in the previous section, is a socio-technical system in constant flux, even throughout the duration of a single project.

There has been a wide range of studies, reports and research projects coming from construction regarding collaboration and communication. Andrew Dainty’s analysis, by informing theoretical aspects of communication and management with the realities of practice, describes a rich and problematic context. Chief among the challenges identified is the fact that the AEC industry operates on a project basis (Dainty et al., 2006, p. 21). In the book Communication in Construction, a project is defined as “an endeavour in which human, financial and material resources are organised in a novel way to undertake a unique scope of work” (ibid., p. 34). Projects, within the AEC world, present a different set of problems than in other industries, such as car manufacturing or industrial design. Specifically, every construction project (and, by extension, design project) is unique, essentially executed only once, with no prior prototyping session that can test assumptions and validate ideas; a building is only built once. To put it differently, a project is a unique combination of client objectives and budget, site constraints, technological solutions, design and stakeholders that collaborate towards its enablement. A further observation is, according to Dainty et al., the fact that “barriers to effective communication are complex and multifarious because of the number of actors which govern the success of the construction processes” (Dainty et al., 2006, p. 18). These actors, given the temporal and finite nature of projects, will collaborate only for short periods of time, leading in turn to barriers in the standardisation and formulation of specific protocols, as each project will tend to build its own network of informal communications.
Nevertheless, this is not necessarily a negative aspect: Dainty points out the Building Industry Communications Research Project report from 1966, which suggests that informal systems and procedures “seem to produce more realistic phasing of decisions and more realistic flexibility in the face of what is described as the inevitable uncertainties in the construction process” (ibid., p. 33).

The Building Industry Communications Research Project (BICRP) was a major study in the UK undertaken by the Tavistock Institute of Human Relations that explored the coordination problems of the construction industry. It ran from 1963 to 1966 and, as Alan Wild describes, during its progress it recorded an evolving understanding and definition of the problem of communication in construction (Wild, 2004). It describes the design and build process as being underpinned by a “network of technical and social relationships”. Initially, the design phase of a project was seen as more complex than construction: “post-war evolution of techniques and material changes in the technical, economic and social context and methods of finance had generated a mismatch of roles”. Construction was seen as having “a sequence of operations […] capable of being foreseen with reasonable accuracy” (ibid.). Nevertheless, this understanding changed during the course of the project. Interestingly, Wild reveals that based on an assessment of the “Briefing and Design” stage, the researchers “dropped their assumption of relative stability in construction” and essentially accepted that there is no comprehensive model. In 1965, the steering committee of the BICRP defined a more abstract view of the building process, which invalidated the self-containment of the building process. A difference was made between the “directive” functions, or stages, of a project (briefing, designing, costing and construction[5]) and the adaptive functions. Where the normative design stages were fixed, the adaptive ones were unbounded and included “variations, post-contract drawings, error and crisis exploitation, re-design, on-site design” (Wild, 2004).
The obvious differences between the normative approaches and the informal ones led to the call for the integration of sociology and organisational research within BICRP in order to study the “psycho-social” system that was now emerging as the dominant problem definition model within the group (ibid.). Unfortunately, the study stopped at this stage due to lack of funding and, due to a lack of consensus on the context in which to carry it on, it was never continued.

Dainty et al. caution against applying too simplistic a model of communication when studying such a phenomenon; to a certain extent, assuming that construction was composed of a predictable sequence of operations led to the BICRP’s turbulent evolution. It is argued that the analogy between a technical (or, as it is otherwise known, code) model of communication and human communication “belies the complex melee of factors that shape and impact on the way in which messages are transferred from the transmitter to the receiver” and “ignores the physical and social context in which people work” (Dainty et al., 2006, p. 56). Reinforcing both Schön’s call for an environment in which collaborative inquiry can coexist within a potentially adversarial context, as well as the previous body of literature from the study of wicked problems, communication is seen as a route to trust and mutual understanding that cannot be explicitly reduced to a series of norms (ibid., p. 230) without losing its emergent properties. The question of which model is best suited to study the communication flows of the AEC industries is a recurrent avenue of research. Similarly to Dainty et al., Emmitt and Gorse also challenge the popular view of communication as a purely technical act of sending and receiving information. They state that different models may be more or less appropriate for different aspects of the process (Emmitt and Gorse, 2003, p. 33), and counterpose to the simplicity of Shannon-Weaver’s theory of communication a set of other configurations that take into account the peculiarities of the AEC industry.

Within this context, digital technologies are often discussed as a potentially positive force that can enable a more efficient flow of information. Nevertheless, Dainty cautions that their usage easily undermines “the tenets of effective human communication and interaction” (Dainty et al., 2006, p. 39). Furthermore, digital technologies “can inhibit explanation of meaning between project participants” (Dainty et al., 2006, p. 118). Essentially, the digital medium imposes, due to its nature, a certain technical paradigm on the process. As the purview of this research project is intimately tied with digital design communication, it is important to understand what different communication models actually entail outside the rigours of a code-based environment, and to extract a set of guiding principles by which, within the last section of this chapter, the contemporary applications of digital design communication in AEC will be assessed.

2.4.1 Communication Models

Different communication models can be applied to different parts of the communication processes within the AEC industries. The goal of the following section is not to come up with a model that fits digital communication within the AEC industries, or a tailored definition of the term itself. As the previous sections outline, successful or effective communication in the context of a wicked problem (be it related to design, construction, planning, or any other domain) is essentially a psycho-social process that is partly enabled by technology. In part, its effectiveness depends on human factors and personality traits that cannot necessarily be fully quantified, or even qualified. In part, it also depends on the applicability and efficiency of formalised (one could say managerial) processes, like the RIBA Plan of Work, that give it structure. Finally, it depends on the technology (digital or otherwise) that one has access to in order to enact communication. Technology can be seen as an application of means to achieve ends (Heidegger, 1978); nevertheless, the ends (or goals) are also fungible or malleable (Blitz, 2014). Therefore, digital technologies that enable communication should be viewed as active, rather than subservient or passive, actors that both serve and shape that which they were originally designed to enable. The same can be said of the models and theories that are used to understand and analyse communication inside the design process.

According to Sperber and Wilson, there are two main categories of communication models: code-based and inferential. Code models of communication have in common the fact that a communicator encodes their message into a signal, which thereafter is decoded by the audience using an identical copy of the code (Wilson and Sperber, 2008, p. 607). Inferential models, on the other hand, posit that a sender “provides evidence of her intention to convey a certain meaning”, which thereafter is inferred by the audience based on the evidence that has been provided and on what else is available (i.e., context). In other words, the act of encoding and decoding is not purely deterministic, or mechanical. It is rather a potentially non-deterministic process which involves various contextual enrichment mechanisms. In what follows, both Shannon’s archetypal code model of communication (which evolved from the engineering requirements of modern technology) and inferential models of communication, which aim to capture the social dynamics of communication, will be analysed. This will allow the necessary context for this research project to emerge. Its digitally-oriented and applied nature would, at first sight, warrant a purely technical approach to communication. Nevertheless, an inferential approach, more closely tied to the psycho-social aspects of communication, will enable a much more relevant analysis.

2.4.2 Code model of communication

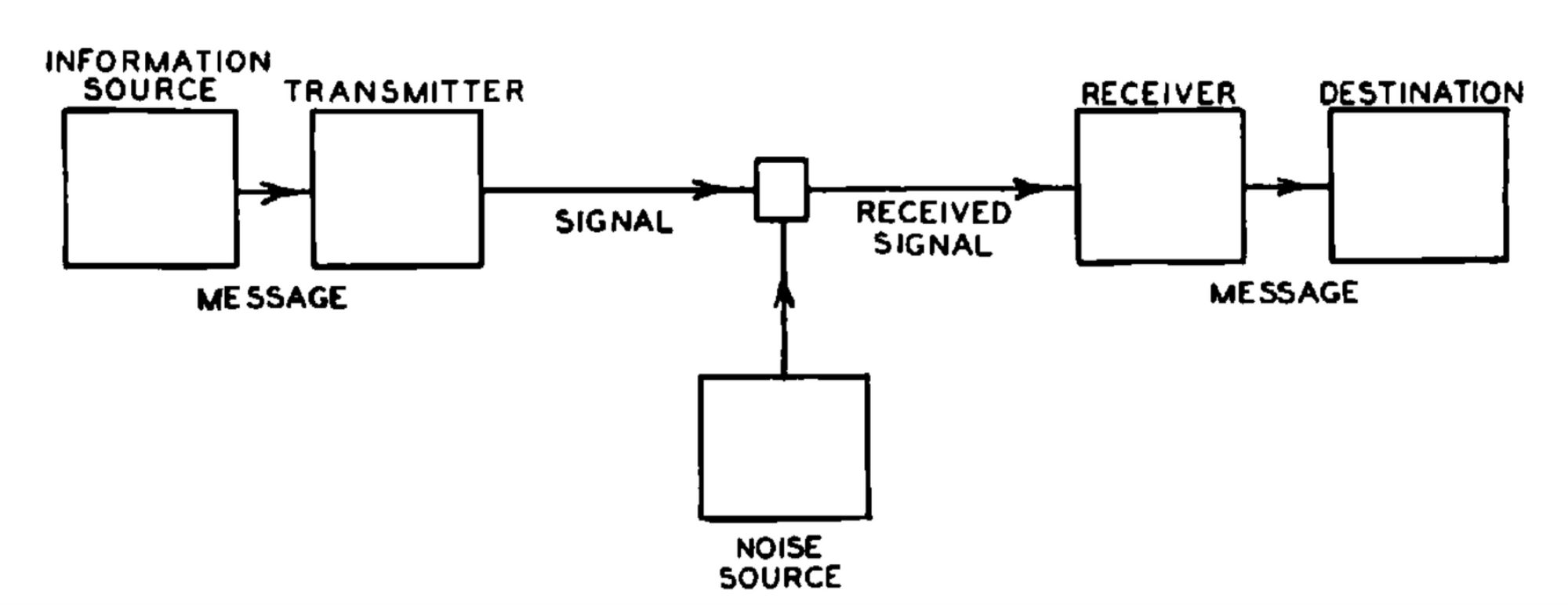

The Shannon-Weaver model of communication has been hugely influential and has been widely adopted in social sciences, biology, psychology, organisational research, etc. It introduced an integrated model of information exchange (Figure 4) that incorporates the concepts of information source, transmitter, receiver, signal, channel, noise, encoding, decoding, etc. into one unifying theory (Shannon, 1948).

Figure 4: Shannon’s diagram of the communication model (Shannon, 1948).

According to Shannon, a communication system consists of five main parts:

- “An information source which produces a message or sequence of messages to be communicated to the receiving terminal. […]

- A transmitter which operates on the message in some way to produce a signal suitable for transmission over the channel. […]

- The channel is merely the medium used to transmit the signal from transmitter to receiver. […]

- The receiver ordinarily performs the inverse operation of that done by the transmitter, reconstructing the message from the signal.

- The destination is the person (or thing) for whom the message is intended.” (Shannon, 1948)
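The five parts listed above can be illustrated with a minimal sketch. It is not taken from Shannon's paper: the function names (`transmitter`, `channel`, `receiver`, `communicate`), the 8-bit encoding, and the random bit-flip noise model are all assumptions chosen purely for illustration.

```python
import random
from typing import List


def transmitter(message: str) -> List[int]:
    """Encode the source's message into a signal: 8 bits per UTF-8 byte."""
    return [(byte >> shift) & 1
            for byte in message.encode("utf-8")
            for shift in range(7, -1, -1)]


def channel(signal: List[int], flip_probability: float = 0.0, seed: int = 0) -> List[int]:
    """Carry the signal, flipping each bit with the given probability (assumed noise model)."""
    rng = random.Random(seed)
    return [bit ^ 1 if rng.random() < flip_probability else bit for bit in signal]


def receiver(signal: List[int]) -> str:
    """Perform the inverse operation of the transmitter: bits back to text."""
    data = bytearray()
    for start in range(0, len(signal), 8):
        byte = 0
        for bit in signal[start:start + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    # Noise may produce invalid byte sequences; replace them rather than fail.
    return data.decode("utf-8", errors="replace")


def communicate(message: str, flip_probability: float = 0.0, seed: int = 0) -> str:
    """Source -> transmitter -> channel -> receiver -> destination."""
    return receiver(channel(transmitter(message), flip_probability, seed))


if __name__ == "__main__":
    sent = "Beam B12: 300x600"  # hypothetical message from an information source
    print(communicate(sent))                          # noiseless channel
    print(communicate(sent, flip_probability=0.05))   # noisy channel
```

On a noiseless channel the destination receives exactly the symbols that were sent. Note that nothing in the sketch represents what the message means to the destination, which is precisely the aspect of communication that the code model leaves out.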

It is important to note that, prior to 1948, communication was mostly an engineering-related discipline, and it concerned itself with the practical problems posed by the advent of the telegraph (1830), telephone (1876), wireless telegraph (1887), radio (1900), television (1925), etc. (Verdu, 1998). Shannon’s theory emerged from this highly technical background and its ground-breaking contribution[6] was to establish a scientific base on which a whole new field, information theory, was developed, which consequently led to the development of the digital age.

Nevertheless, Shannon frames his theory clearly in an engineering and scientific context. He acknowledges that messages have meaning: “they [messages] refer to or are correlated according to some system with certain physical or conceptual entities” (Shannon, 1948, p. 379) but he clearly differentiates his work from this view: “these semantic aspects of communication are irrelevant to the engineering problem” (ibid., p. 380). In the book that presented Shannon’s theory to a general audience, Weaver underlines the fact that Shannon’s model is purely technical, as opposed to semantic or qualitative. Consequently, “information must not be equated with meaning” (Shannon and Weaver, 1963). Yet, as shown earlier in this chapter, Tsoukas, nearly five decades later, criticises modern society’s decontextualised approach to information. In turn, this can be interpreted as the consequence of disregarding the context of Shannon’s communication theory, against both his and Weaver’s arguments. The distancing of information from its natural semantic and social embedding can also be traced in the BICRP’s evolving understanding of the problem within the construction industry: from an initially clear and “tame” problem dealing with linear information exchanges, it became an unquantifiable “psycho-social” system full of informal qualities. As Tsoukas would put it, in a social context, “disembodied” information is detrimental to the process of communication.

2.4.3 Inferential models of communication

Consequently, the appropriation of Shannon’s model in other domains may carry across the marginalisation of the semantic aspects of communication, to the potential detriment of the respective field. Harold Garfinkel, a contemporary of Shannon who is known for his role in establishing ethnomethodology and conversation analysis as scientific fields, developed a separate information and communication theory that was, nevertheless, not published until 40 years later. He counters Shannon’s and his precursors’ technical models with a social theory of information, one of its most important tenets being that, according to Rawls, “information is not only put to social uses, but becomes information in the first place through situated social practices” (Garfinkel and Rawls, 2006, p. 11). His prior critique of quantification is similar to that of Tsoukas and Strathern, namely that reducing social order to general trends removes unquantifiable (qualitative and detailed) processes that are crucial to understanding it in the first place. To Garfinkel, information is not something that is recalled from an abstract storage, but is something that is re-created out of the “resources of the available order of possibilities of experience, available sensory materials, actions, etc.” (ibid., p. 158).

Garfinkel posits that information, from a social point of view, accumulates over the course of an exchange. Thus, previously transmitted information continuously enriches the context of its current interpretation and facilitates its transformation into meaning. An important condition underpinning this is, according to Garfinkel, continuity: interactions are seen to have a given “time horizon” of succession, which, if surpassed, causes an interruption in the “stream of experience” and thus breaks the communicative process (Garfinkel and Rawls, 2006, p. 186). Memory is a set of operations by which a previous meaningful experience can be reproduced or represented (ibid., p. 159). This describes a picture of communication in which information processes and social interaction are interwoven. Consequently, Garfinkel sees communication not just as the deterministic exchange of symbols that Shannon’s model describes, but as a cooperative process through which actors, by means of an ordered exchange of symbols, facilitate mutual intelligibility (ibid., p. 15). The cooperative aspect of communication is an important notion: not restricted to Garfinkel’s work, it also surfaces in Grice’s inferential model of communication, which is introduced below. Moreover, it is inherently linked to the same concept present in the previous analysis of normative approaches to design and wicked problem resolution.

In 1975, Grice introduced the “Cooperative Principle” in his essay Logic and Conversation. It describes how receivers and senders of information must act cooperatively and mutually accept each other in order to be understood. In Grice’s words: “make your conversational contribution such as is required at the stage at which it occurs, by the accepted purpose or direction of talk exchange in which you are currently engaged” (Grice, 1991, p. 27). According to Grice, any rational conversation must follow this principle. It comprises four maxims, namely (1) the maxim of quantity, (2) the maxim of quality, (3) the maxim of relation, and (4) the maxim of manner, which are summarised below:

“(1) quantity: make your contribution as informative as is required; do not make your contribution more informative than is required […]

(2) quality: make your contribution one that is true […]

(3) relation: “be relevant” […]

(4) manner: avoid obscurity and ambiguity, be brief and orderly […]”

(Grice, 1991, pp. 26-28)

Grice notes that the cooperative principle does not apply only to verbal exchanges, but also to any cooperative transactions, such as those happening in the AEC industries. The maxim of quantity can be exemplified as follows: if a structural engineer is tasked with the load analysis of a building, they will most probably expect to receive not the full detailed 3D model, but the schematic engineering model. Similarly, for the maxim of quality: the architect expects to receive back the real volumetric dimensions of the structural elements, not spurious ones. For the maxim of relation: the person in charge of the project’s quantification will expect to receive the gross dimensions of the building elements, and not the fire escape plan. Finally, the maxim of manner implies that participants expect of each other that they “execute their performance with reasonable dispatch” (Grice, 1991, p. 28).

Earlier in this chapter it has been shown that cooperation inside varied stakeholder networks does not necessarily imply a wholly non-adversarial context (one in which relationships are fully positive). Trust and mistrust can coexist and, furthermore, different disciplines, domains or actors may have conflicting definitions of value. Grice, when analysing the features of cooperative transactions, takes this fact into account and elegantly says that, while participants must have some common immediate aim, their ultimate aims may vary: they can be independent of each other or even in conflict. For example, an altruistic architect’s ultimate goal might be to design the best building as defined, say, aesthetically; a developer’s ultimate goal might be to maximise profit. Even if these two actors’ goals are in conflict, they will engage in cooperative transactions in order to resolve (and define) sub-tasks that will progress their individual goals. Furthermore, Grice reinforces Garfinkel’s view of the importance of sequentiality (a linear succession of events) in communication. The second feature of cooperative transactions that he identifies is that the contributions of the participants should be mutually dependent, or “dovetailed” (Grice, 1991, p. 29). In essence, transactions in which information is shared but not used, or not depended upon, are not cooperative. An actor that unilaterally and blindly broadcasts information is adding “noise” to the system: the information sent does not enrich the context in which it can be interpreted, which prevents its transformation into meaning, as described by Garfinkel[7]. As such, it is not relevant.

The issue of relevance becomes central in the work of Sperber and Wilson. By building on the inferential model of communication outlined by Grice, they expand the third maxim, “be relevant”, and propose a complementary theory of communication, namely relevance theory. Its central claim is that “the expectations of relevance raised by an utterance are precise and predictable enough to guide the hearer towards the speaker’s meaning” (Wilson and Sperber, 2008, p. 607). This is based on the fact that when something is communicated, expectations are automatically created as to its quantity, quality, relation and manner. The receiver, based on these expectations, thereafter infers the meaning of the speaker’s communicated information. Sperber and Wilson, nevertheless, question the rigidity of Grice’s four maxims and propose an alternative theory informed by human cognition. They state that human cognition is geared towards the maximisation of relevance, and that this alone is sufficient to explain inferential communication (ibid., p. 609).

An input is relevant to an individual when it “connects with background information he has available to yield conclusions that matter to him” (ibid., p. 610). For example, the information that “there are delays on the Victoria line” (input) can lead to a complete reorganisation of an individual’s travel arrangements (context), as there would not be enough time to catch the subsequent connection: the individual would potentially even opt for a completely different means of public transport. Such a cognitive effect is described by Sperber and Wilson as being positive, as opposed to passive. The latter, when contextualised, does not trigger any major actions or the generation of new knowledge (for example, “water is wet”). Conversely, the former enables a change in an individual’s representation of the world. Taking the example above, if the individual did not need the Victoria line, they would not benefit from any positive cognitive effect; nevertheless, since they do need to get to King’s Cross and their current fastest option is taking the Victoria line from Vauxhall, the announcement of the delays prompts them to take the Northern line from nearby Oval. As such, the input did have a positive cognitive effect: the receiver’s understanding of the world changed.